Examining Operations Security

Operations security is concerned with the day-to-day practices necessary to first deploy and later maintain a secure system. This section examines these principles.

Secure Network Life Cycle Management

The responsibilities of the operations team pertain to everything that takes place to keep a network, computer systems, applications, and the environment up and running in a secure and protected manner. After the network is set up, the operations tasks begin, including the continual day-to-day maintenance of the environment. These activities are regular in nature and enable the environment, systems, and applications to continue to run correctly and securely.

Operations within a computing environment can pertain to software, personnel, and hardware, but an operations department often focuses only on the hardware and software aspects. Management is responsible for the behavior and responsibilities of employees. The people within operations are responsible for ensuring that systems are protected and that they continue to run in a predictable manner.

The operations team usually has the following objectives:

- Preventing reoccurring problems
- Reducing hardware failures to an acceptable level
- Reducing the impact of hardware failure or disruption

This group should investigate any unusual or unexplained occurrences, unscheduled initial program loads, deviations from standards, or other odd or abnormal conditions that take place on the network.

Including security early in the system development life cycle (SDLC) usually results in less expensive and more effective security than adding it to an operational system.

A general SDLC includes five phases:

- Initiation
- Acquisition and development
- Implementation
- Operations and maintenance
- Disposition

Each of these five phases includes a minimum set of security steps that you need to effectively incorporate security into a system during its development. An organization either uses the general SDLC or develops a tailored SDLC that meets its specific needs. In either case, the National Institute of Standards and Technology (NIST) recommends that organizations incorporate the associated IT security steps of this general SDLC into their development process.

Initiation Phase

The initiation phase of the SDLC includes the following:

- Security categorization: This step defines three levels (low, moderate, and high) of potential impact on organizations or individuals should a breach of security occur (a loss of confidentiality, integrity, or availability). Security categorization standards help organizations make the appropriate selection of security controls for their information systems.
- Preliminary risk assessment: This step results in an initial description of the basic security needs of the system. A preliminary risk assessment should define the threat environment in which the system will operate.
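
The categorization step can be illustrated with a short sketch. It is not taken from the text: it assumes the common "high-water mark" convention, in which the overall impact level of a system is the highest of the levels assigned to confidentiality, integrity, and availability. The names `Impact` and `overall_impact` are hypothetical.

```python
from enum import IntEnum


class Impact(IntEnum):
    """The three potential-impact levels named in the text."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


def overall_impact(confidentiality: Impact, integrity: Impact, availability: Impact) -> Impact:
    """Return the highest of the three levels (assumed high-water-mark rule)."""
    return max(confidentiality, integrity, availability)


# Hypothetical system: the data is public, but tampering or downtime would hurt.
level = overall_impact(Impact.LOW, Impact.MODERATE, Impact.HIGH)
print(level.name)
```

An organization that weighs the three security objectives differently would replace `max` with its own rule; the point is only that the categorization feeds the later selection of controls.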

Acquisition and Development Phase

The acquisition and development phase of the SDLC includes the following:

- Risk assessment: This step is an analysis that identifies the protection requirements for the system through a formal risk-assessment process. This analysis builds on the initial risk assessment that was performed during the initiation phase, but is more in depth and specific.
- Security functional requirements analysis: This step is an analysis of requirements and can include the following components: system security environment, such as the enterprise information security policy and enterprise security architecture, and security functional requirements.
- Security assurance requirements analysis: This step is an analysis of the requirements that address the developmental activities required and the assurance evidence needed to produce the desired level of confidence that the information security will work correctly and effectively. The analysis, based on legal and functional security requirements, is used as the basis for determining how much and what kinds of assurance are required.
- Cost considerations and reporting: This step determines how much of the development cost you can attribute to information security over the life cycle of the system. These costs include hardware, software, personnel, and training.
- Security planning: This step ensures that you fully document any agreed-upon security controls, whether they are just planned or in place. The security plan also provides a complete characterization or description of the information system and attachments or references to key documents that support the information security program of the agency. Examples of documents that support the information security program include a configuration management plan, a contingency plan, an incident response plan, a security awareness and training plan, rules of behavior, a risk assessment, security test and evaluation results, system interconnection agreements, security authorizations and accreditations, and a plan of action and milestones.
- Security control development: This step ensures that the security controls that the respective security plans describe are designed, developed, and implemented. The security plans for information systems that are currently in operation may call for the development of additional security controls to supplement the controls that are already in place or the modification of selected controls that are deemed less than effective.
- Developmental security test and evaluation: This step ensures that security controls that you develop for a new information system are working properly and are effective. Some types of security controls, primarily those controls of a nontechnical nature, cannot be tested and evaluated until the information system is deployed. These controls are typically management and operational controls.
- Other planning components: This step ensures that you consider all the necessary components of the development process when you incorporate security into the network life cycle. These components include the selection of the appropriate contract type, the participation by all the necessary functional groups within an organization, the participation by the certifier and accreditor, and the development and execution of the necessary contracting plans and processes.

Implementation Phase

The implementation phase of the SDLC includes the following:

- Inspection and acceptance: This step ensures that the organization validates and verifies that the functionality that the specification describes is included in the deliverables.
- System integration: This step ensures that the system is integrated at the operational site where you will deploy the information system for operation. You enable the security control settings and switches in accordance with the vendor instructions and the available security implementation guidance.
- Security certification: This step ensures that you effectively implement the controls through established verification techniques and procedures. This step gives organization officials confidence that the appropriate safeguards and countermeasures are in place to protect the information system of the organization. Security certification also uncovers and describes the known vulnerabilities in the information system.
- Security accreditation: This step provides the necessary security authorization of an information system to process, store, or transmit information that is required. This authorization is granted by a senior organization official and is based on the verified effectiveness of security controls to some agreed-upon level of assurance and an identified residual risk to agency assets or operations.

Operations and Maintenance Phase

The operations and maintenance phase of the SDLC includes the following:

- Configuration management and control: This step ensures that there is adequate consideration of the potential security impacts due to specific changes to an information system or its surrounding environment. Configuration management and configuration control procedures are critical to establishing an initial baseline of hardware, software, and firmware components for the information system and subsequently controlling and maintaining an accurate inventory of any changes to the system.
- Continuous monitoring: This step ensures that controls continue to be effective in their application through periodic testing and evaluation. Monitoring security controls (that is, verifying their continued effectiveness over time) and reporting the security status of the information system to appropriate agency officials are essential activities of a comprehensive information security program.
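
The idea of establishing a baseline and then keeping an accurate inventory of changes can be sketched as a comparison of two inventories. This is a minimal illustration under stated assumptions: each configuration item is represented by a SHA-256 digest of its content, and the function names, file names, and contents are all hypothetical.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 digest used to detect changes to a component."""
    return hashlib.sha256(content).hexdigest()


def diff_against_baseline(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {component name: digest} inventories."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": sorted(
            name for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }


# Hypothetical inventory of configuration items (names and contents are made up).
baseline = {
    "router.cfg": fingerprint(b"hostname edge-1\n"),
    "firmware.bin": fingerprint(b"v1.0"),
}
current = {
    "router.cfg": fingerprint(b"hostname edge-1\nip http server\n"),
    "firmware.bin": fingerprint(b"v1.0"),
    "extra.cfg": fingerprint(b"new file"),
}
report = diff_against_baseline(baseline, current)
print(report)
```

Running such a comparison on a schedule is one simple way to support both steps above: the baseline serves configuration control, and the periodic report serves continuous monitoring.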

Disposition Phase

The disposition phase of the SDLC includes the following:

- Information preservation: This step ensures that you retain information, as necessary, to conform to current legal requirements and to accommodate future technology changes that can render the retrieval method of the information obsolete.
- Media sanitization: This step ensures that you delete, erase, and write over data as necessary.
- Hardware and software disposal: This step ensures that you dispose of hardware and software as directed by the information system security officer.
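
The "write over" part of media sanitization can be sketched as follows. This is only an illustration of the idea, with a hypothetical helper name: overwriting a file through the file system does not guarantee that the data is gone on solid-state drives with wear leveling or on journaling and copy-on-write file systems, so real sanitization should follow the procedure that the security officer prescribes.

```python
import os
import tempfile


def overwrite_and_delete(path: str, passes: int = 1) -> None:
    """Overwrite a file's bytes in place with zeros, then delete it."""
    size = os.path.getsize(path)
    with open(path, "r+b") as handle:
        for _ in range(passes):
            handle.seek(0)
            handle.write(b"\x00" * size)   # fine for a sketch; chunk this for large files
            handle.flush()
            os.fsync(handle.fileno())      # ask the OS to push the overwrite to the device
    os.remove(path)


# Demonstration on a throwaway temporary file.
fd, demo_path = tempfile.mkstemp()
os.write(fd, b"sensitive data")
os.close(fd)
overwrite_and_delete(demo_path)
print(os.path.exists(demo_path))
```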

Principles of Operations Security

Certain core principles are part of the secure operations that are intended for information systems security (infosec). The following are among these principles:

- Separation of duties
  - Two-man control
  - Dual operator
- Rotation of duties
- Trusted recovery, which includes the following:
  - Failure preparation
  - System recovery
- Change and configuration controls

Separation of Duties

Separation of duties (SoD) is one of the key concepts of internal control and is the most difficult and sometimes the most costly control to achieve. SoD states that no single individual should have control over two or more phases of a transaction or operation, which makes deliberate fraud more difficult to perpetrate because it requires the collusion of two or more individuals or parties.

The term SoD is already well-known in financial systems. Companies understand not to combine roles such as receiving checks, approving discounts, depositing cash and reconciling bank statements, approving time cards, and so on.

In information systems, segregation of duties helps to reduce the potential impact of the actions or inaction of one person. You should organize IT in a way that achieves adequate SoD.
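
The rule that no single individual should control two or more phases of a transaction lends itself to a simple check. The sketch below is illustrative only; the function name, the people, and the phase labels are hypothetical, with the phases borrowed from the financial examples above.

```python
from collections import defaultdict


def sod_violations(assignments: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Return the people who control two or more phases of one transaction.

    `assignments` is a list of (person, phase) pairs.
    """
    phases_by_person: dict[str, set[str]] = defaultdict(set)
    for person, phase in assignments:
        phases_by_person[person].add(phase)
    return {
        person: sorted(phases)
        for person, phases in phases_by_person.items()
        if len(phases) >= 2
    }


# Hypothetical payment transaction.
payment = [
    ("alice", "receive check"),
    ("bob", "deposit cash"),
    ("alice", "reconcile bank statement"),
]
violations = sod_violations(payment)
print(violations)
```

An empty result means that, for this transaction, fraud would require collusion between at least two people.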


The two-man control principle uses two individuals who review and approve each other's work. This principle provides accountability and reduces risks such as fraud. Because of the obvious overhead involved, this practice is usually limited to sensitive duties considered potential security risks.

The dual-operator principle differs from two-man control because the task involved actually requires two people. Examples of the dual-operator principle are a check that requires two signatures for the bank to accept it, and a safe-deposit box for which you hold one key and the bank clerk holds the second key.
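
A dual-operator requirement can be expressed as a check that an action carries approvals from at least two distinct people. This is a minimal sketch with a hypothetical function name; it assumes each signer is identified by a unique string.

```python
def dual_operator_ok(signers: list[str], required: int = 2) -> bool:
    """Return True only if at least `required` distinct people have signed."""
    return len(set(signers)) >= required


two_people = dual_operator_ok(["alice", "bob"])      # two different signers
one_person = dual_operator_ok(["alice", "alice"])    # the same person signing twice
print(two_people, one_person)
```

Counting distinct signers rather than signatures is the important detail: one person signing twice must not satisfy the requirement.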


Rotation of Duties

Rotation of duties is sometimes called job rotation. To successfully implement this principle, it is important that individuals have the training necessary to complete more than one job. Peer review is usually included in the practice of this principle.

For example, suppose that a job-rotation scheme has five people rotating through five different roles during the course of a week. Peer review of work occurs whether or not it was intended. When five people do one job in the course of the week, each person is effectively reviewing the work of the others.
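
The five-person example can be sketched as a cyclic schedule. The text does not say how often people switch, so this sketch assumes a daily rotation over a five-day week; the staff names, role names, and the function `rotation_schedule` are hypothetical.

```python
def rotation_schedule(people: list[str], roles: list[str], days: int) -> list[dict[str, str]]:
    """Cyclic rotation: each day, every person moves on to the next role."""
    if len(people) != len(roles):
        raise ValueError("this sketch assumes one person per role")
    n = len(people)
    return [
        {roles[(i + day) % n]: people[i] for i in range(n)}
        for day in range(days)
    ]


people = ["Ann", "Ben", "Cy", "Dee", "Eve"]                       # hypothetical staff
roles = ["backup", "patching", "firewall", "logs", "helpdesk"]    # hypothetical roles
week = rotation_schedule(people, roles, days=5)

# Everyone who held the "backup" role at some point during the week.
backup_holders = [day["backup"] for day in week]
print(backup_holders)
```

Because every role passes through all five people, each person inherits and implicitly reviews the work that the previous holder left behind.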

The most obvious benefit of this practice is the great strength and flexibility that would exist within a department because everyone is capable of doing all the jobs. Although the purpose for the practice is rooted in security, you gain an additional business benefit from this breadth of experience of the personnel.

Trusted Recovery

One of the easiest ways to compromise a system is to make the system restart and compromise it before all of its defenses can be reloaded. For this reason, trusted recovery is a principle of operations security. The trusted recovery principle states that you must expect that systems and individuals will experience a failure at some time and you must prepare for this failure. Because you anticipate the failure, you can have a recovery plan for both systems and personnel available and implemented. The most common way to prepare for failure is to back up data on a regular basis.

Backing up data is a normal occurrence in most IT departments and is commonly performed by junior-level staff. However, this is not a very secure operation because backup software uses an account that can bypass file security to back up the files. Therefore, junior-level staff members have access to files that they would ordinarily not be able to access. The same is true if these same junior staff members have the right to restore data.

Security professionals propose that a secure backup program contain some of the following practices:

- A junior staff member is responsible for loading blank media.
- Backup software uses an account that is unknown to individuals to bypass file security.
- A different staff member removes the backup media and securely stores it on site while being assisted by another member of the staff.
- A separate copy of the backup is stored off site and is handled by a third staff member who is accompanied by another staff member.


Being prepared for system failure is an important part of operations security. The following are examples of things that help provide system recovery:

- Operating systems and applications that have single-user or safe mode help with system recovery.
- The ability to recover files that were open at the time of the problem helps ensure a smooth system recovery. The autosave process in many desktop applications is an example of this ability. A memory dump that many operating systems perform upon a system failure is also an example of this ability.
- The ability to retain the security settings of a file after a system crash is critical so that the security is not bypassed by forcing a crash.
- The ability to recover and retain security settings for critical system files, such as the Registry, configuration files, password files, and so on, is critical for providing system recovery.
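
The last two points, retaining and recovering the security settings of files, can be illustrated with a sketch that records file permission bits before a failure and reapplies them afterward. It assumes a POSIX-style permission model (on Windows, `os.chmod` controls only the read-only flag), and the helper names are hypothetical. Real systems rely on the operating system and backup tooling for this; the sketch only shows the principle.

```python
import os
import stat
import tempfile


def snapshot_modes(paths: list[str]) -> dict[str, int]:
    """Record the permission bits of each file."""
    return {p: stat.S_IMODE(os.stat(p).st_mode) for p in paths}


def restore_modes(snapshot: dict[str, int]) -> list[str]:
    """Reapply recorded permission bits; return the paths that had drifted."""
    repaired = []
    for path, mode in snapshot.items():
        if stat.S_IMODE(os.stat(path).st_mode) != mode:
            os.chmod(path, mode)
            repaired.append(path)
    return repaired


# Demonstration on a temporary file standing in for a critical system file.
fd, demo = tempfile.mkstemp()
os.close(fd)
os.chmod(demo, 0o600)
saved = snapshot_modes([demo])
os.chmod(demo, 0o644)                  # simulate settings lost during a crash
fixed = restore_modes(saved)
restored_mode = stat.S_IMODE(os.stat(demo).st_mode)
os.remove(demo)
print(fixed == [demo], oct(restored_mode))
```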

Change and Configuration Control

The goal of change and configuration controls is to ensure that you use standardized methods and procedures to efficiently handle all changes. To make changes efficient, you should minimize the impact of change-related incidents and improve the day-to-day operations.

A change is defined as an event that results in a new status of one or more configuration items. A change should be approved by management, be cost-effective, and be an enhancement to business processes with a minimum risk to the IT infrastructure and security.

The three major goals of change and configuration management are as follows:

- Minimal system and network disruption
- Preparation to reverse changes
- More economic utilization of resources

To accomplish configuration changes in an effective and safe manner, adhere to the following suggestions:

- Ensure that the change is implemented in an orderly manner with formalized testing.
- Ensure that the end users are aware of the coming change (when necessary).
- Analyze the effects of the change after it is implemented.
- Reduce the potential negative impact on performance or security, or both.
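
The conditions named in this section (management approval, formalized testing, user notification when necessary, and preparation to reverse the change) can be gathered into a simple pre-implementation check. The sketch below is one possible arrangement, not a prescribed process; the `ChangeRequest` record, its fields, and `blocking_issues` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChangeRequest:
    """Hypothetical record of one proposed configuration change."""
    summary: str
    approved_by_management: bool = False
    tested: bool = False
    users_notified: bool = False
    rollback_plan: str = ""


def blocking_issues(change: ChangeRequest, notify_users: bool = True) -> list[str]:
    """List the checks from the text that the change has not yet passed."""
    issues = []
    if not change.approved_by_management:
        issues.append("not approved by management")
    if not change.tested:
        issues.append("no formalized testing")
    if notify_users and not change.users_notified:
        issues.append("end users not notified")
    if not change.rollback_plan:
        issues.append("no plan to reverse the change")
    return issues


change = ChangeRequest(
    summary="Tighten ACL on edge router",   # illustrative change
    approved_by_management=True,
    tested=True,
)
issues = blocking_issues(change)
print(issues)
```

The `notify_users` flag reflects the "when necessary" qualifier: a change that end users never see can skip that check.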

Although the change control process differs from organization to organization, certain patterns emerge in change management. The following are steps in a typical change control process:


Network Security Testing

Security testing provides insight into the other SDLC activities such as risk analysis and contingency planning. You should document the results of security testing and make them available to staff involved in other IT and security-related areas. Typically, you conduct network security testing during the implementation and operational stages, after the system has been developed, installed, and integrated.

During the implementation stage, you should conduct security testing and evaluation on specific parts of the system and on the entire system as a whole. Security test and evaluation (ST&E) is an examination or analysis of the protective measures that are placed on an information system after it is fully integrated and operational. The following are the objectives of the ST&E:

- Uncover design, implementation, and operational flaws that could allow the violation of the security policy
- Determine the adequacy of security mechanisms, assurances, and other properties to enforce the security policy
- Assess the degree of consistency between the system documentation and its implementation

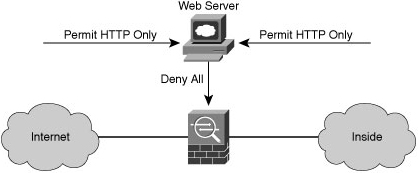

Once a system is operational, it is important to ascertain its operational status. You can conduct many tests to assess the operational status of the system. The types of tests you use and the frequency with which you conduct them depend on the importance of the system and the resources available for testing. You should repeat these tests periodically and whenever you make a major change to the system. For systems that are exposed to constant threat, such as web servers, or systems that protect critical information, such as firewalls, you should conduct tests more frequently.

Security Testing Techniques

You can use security testing results in the following ways:

- As a reference point for corrective action
- To define mitigation activities to address identified vulnerabilities
- As a benchmark to trace the progress of an organization in meeting security requirements
- To assess the implementation status of system security requirements
- To conduct cost and benefit analysis for improvements to system security
- To enhance other life cycle activities, such as risk assessments, certification and authorization (C&A), and performance-improvement efforts

There are several different types of security testing. Some testing techniques are predominantly manual, and other tests are highly automated. Regardless of the type of testing, the staff that sets up and conducts the security testing should have significant security and networking knowledge, including significant expertise in the following areas: network security, firewalls, IPSs, operating systems, programming, and networking protocols, such as TCP/IP.

Many testing techniques are available, including the following:

- Network scanning
- Vulnerability scanning
- Password cracking
- Log review
- Integrity checkers
- Virus detection
- War dialing
- War driving (802.11 or wireless LAN testing)
- Penetration testing
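Of the techniques listed, an integrity checker is straightforward to sketch: record a cryptographic digest of each file while the system is in a known-good state, then compare later digests against that baseline. The following is a minimal illustration under that assumption. The function names `build_baseline` and `compare` are hypothetical, and SHA-256 is one reasonable choice of hash rather than a requirement of the technique.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_baseline(root: Path) -> dict[str, str]:
    """Record a digest for every regular file under root."""
    return {
        str(p.relative_to(root)): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified since the baseline."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            name
            for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "a.txt").write_text("one")
        baseline = build_baseline(root)
        (root / "a.txt").write_text("tampered")  # simulate an unexpected change
        print(compare(baseline, build_baseline(root)))
```

In practice the baseline would need to be stored somewhere an intruder cannot alter it, such as read-only media; this sketch keeps it in memory only.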

Common Testing Tools

Many testing tools are available in the modern marketplace that you can use to test the security of your systems and networks. The following list is a collection of tools that are quite popular; some of the tools are freeware, some are not:

Some testing tools are actually hacking tools. Why not try these tools on your own network before a hacker does? Find the weaknesses on your network before the hacker finds and exploits them. The following paragraphs look at two tools that are commonly used.

Nmap is the best-known low-level scanner available to the public. It is simple to use and has an array of excellent features that you can use for network mapping and reconnaissance. The basic functionality of Nmap enables the user to do the following:

- Perform classic TCP and UDP port scanning (looking for different services on one host) and sweeping (looking for the same service on multiple systems)
- Perform stealth port scans and sweeps, which are hard for the target host or IPSs to detect
- Identify remote operating systems, known as OS fingerprinting, through their TCP idiosyncrasies
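The difference between a port scan and a port sweep can be shown with a short sketch. It uses ordinary full TCP connections from the Python standard library, so it is neither a stealth scan nor a representation of how Nmap is implemented. The function names are hypothetical, and hosts are assumed to be given as IP addresses.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a full TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


def port_scan(host: str, ports: list[int]) -> list[int]:
    """Port scan: look for different services on one host."""
    return [port for port in ports if tcp_port_open(host, port)]


def port_sweep(hosts: list[str], port: int) -> list[str]:
    """Port sweep: look for the same service on multiple hosts."""
    return [host for host in hosts if tcp_port_open(host, port)]


if __name__ == "__main__":
    # Scan a few well-known ports on the local machine only.
    print(port_scan("127.0.0.1", [22, 80, 443]))
```

Because each probe completes the TCP handshake, the target host can log every attempt, which is exactly what the stealth techniques mentioned above try to avoid.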

Advanced features of Nmap include protocol scanning, known as Layer 3 port scanning, which can identify Layer 3 protocol support on a host, such as generic routing encapsulation (GRE) support and Open Shortest Path First (OSPF) support. Nmap can also use decoy hosts on the same LAN to mask your identity.

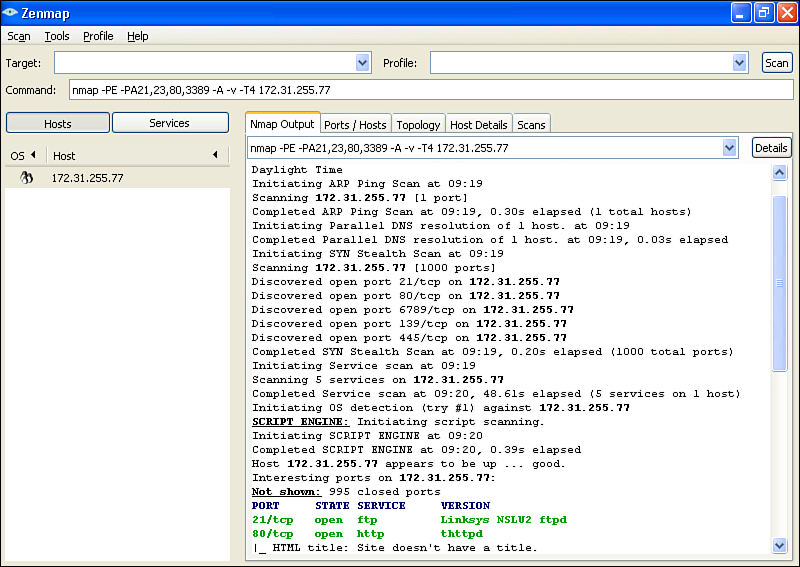

Figure 1-18 shows a screen output of Zenmap, the GUI for the Nmap Security Scanner.

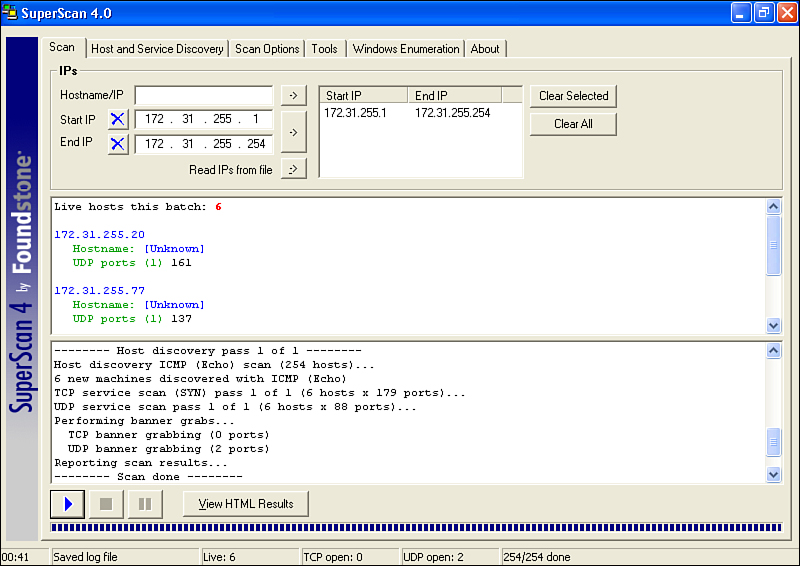

SuperScan Version 4 is an update of the highly popular Microsoft Windows port scanning tool, SuperScan. It runs only on Windows XP and Windows 2000 and requires administrator privileges. Windows XP SP2 removed support for raw sockets, which limits the capability of SuperScan and other scanning tools. Some functionality can be restored by entering the net stop SharedAccess command at the Windows command prompt.

The following are some of the features of SuperScan Version 4:

- Adjustable scanning speed
- Support for unlimited IP ranges
- Improved host detection using multiple ICMP methods
- TCP SYN scanning
- UDP scanning (two methods)
- Source port scanning
- Fast hostname resolving
- Extensive banner grabbing
- Massive built-in port list description database
- IP and port scan order randomization
- A selection of useful tools (ping, traceroute, Whois, and so on)
- Extensive Windows host enumeration capability

Figure 1-19 shows a screen capture of SuperScan results.

Disaster Recovery and Business Continuity Planning

Business continuity planning and disaster recovery procedures address the continuing operations of an organization in the event of a disaster or prolonged service interruption that affects the mission of the organization. Such plans should address an emergency response phase, a recovery phase, and a return to normal operation phase. You should identify the responsibilities of personnel and the available resources during an incident. In reality, contingency and disaster recovery plans do not address every possible scenario or assumption. Rather, they focus on the events most likely to occur and identify an acceptable method of recovery. Periodically, you should exercise the plans and procedures to ensure that they are effective and well understood.

Business continuity planning provides a short- to medium-term framework to continue the organizational operations. The following are objectives of business continuity planning:

- Moving or relocating critical business components and people to a remote location while the original location is being repaired
- Using different channels of communication to deal with customers, shareholders, and partners until operations return to normal

Disaster recovery is the process of regaining access to the data, hardware, and software necessary to resume critical business operations after a natural or human-induced disaster. A disaster recovery plan should also include plans for coping with the unexpected or sudden loss of key personnel. A disaster recovery plan is part of a larger process known as business continuity planning.

After the events of September 11, 2001, when many companies lost irreplaceable data, the effort put into protecting that data has changed. It is believed that some companies spend up to 25 percent of their IT budget on disaster recovery planning to avoid larger losses. Research indicates that of companies that had a major loss of computerized records, 43 percent never reopen, 51 percent close within two years, and only 6 percent survive long term.

Not all disruptions to business operations are equal. Whether the disruption is natural or human, intentional or unintentional, the effect is the same. A good disaster recovery plan takes into account the magnitude of the disruption, recognizing that there are differences between catastrophes, disasters, and nondisasters. In each case, a disruption occurs, but the scale of that disruption can dramatically differ.

Generally, a nondisaster is a situation in which business operations are interrupted for a relatively short period of time. Disasters cause interruptions of at least a day, sometimes longer. The significant detail in a disaster is that the facilities are not 100 percent destroyed. In a catastrophe, the facilities are destroyed, and all operations must be moved.
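The three categories can be expressed as a simple decision rule. The sketch below is only an illustration of the distinctions drawn above: it treats "at least a day" as a 24-hour threshold, which is an assumption, and the names `Disruption` and `classify` are hypothetical.

```python
from enum import Enum


class Disruption(Enum):
    NONDISASTER = "nondisaster"
    DISASTER = "disaster"
    CATASTROPHE = "catastrophe"


def classify(outage_hours: float, facility_destroyed: bool) -> Disruption:
    """Classify a disruption by facility state and outage length."""
    if facility_destroyed:
        # Facilities are destroyed, so all operations must be moved.
        return Disruption.CATASTROPHE
    if outage_hours >= 24:
        # Interruption of at least a day with facilities still partly usable.
        return Disruption.DISASTER
    # Relatively short interruption.
    return Disruption.NONDISASTER


if __name__ == "__main__":
    print(classify(outage_hours=2, facility_destroyed=False).value)
```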

The only way to deal with destruction is redundancy. When a component is destroyed, it must be replaced with a redundant component. When service is disrupted, it must be insured with a service level agreement (SLA) wherein some compensation is acquired for the disruption in service. And when a facility is destroyed, there must be a redundant facility. Without redundancy, it is impossible to recover from destruction.

Redundant facilities are referred to as hot, warm, and cold sites. Each of these is available for a different price, with different resulting downtimes.

In the case of a hot site, a completely redundant facility is acquired with almost identical equipment. The copying of data to this redundant facility is part of normal operations, so that in the case of a catastrophe, only the latest changes of data must be applied so that full operations are restored. With enough money spent in preparation for a catastrophe, this recovery can take as little as a few minutes or even seconds.

Warm sites are physically redundant facilities without the software and data standing by. Overnight replication would not occur in these instances, necessitating a disaster recovery team to physically go to the redundant facility and bring it up. Depending on how much software and how much data is involved, it can take days to resume operations.

A cold site is usually an empty data center with racks, power, WAN links, and heating, ventilation, and air conditioning (HVAC) already present, but no equipment. In this case, an organization would have to first acquire routers, switches, firewalls, servers, and so on to rebuild everything. Once you restore the backups to the new machines, operations can continue. This option is the least expensive in terms of money spent annually, but would usually take weeks to resume full operations.
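The trade-off between cost and downtime across hot, warm, and cold sites can be sketched as a lookup that picks the least expensive site type whose recovery time fits the downtime an organization can tolerate. The recovery times below are illustrative assumptions chosen to match the orders of magnitude described above (minutes, days, weeks), not measured figures, and `cheapest_site` is a hypothetical helper.

```python
from datetime import timedelta
from typing import Optional

# Ordered from least to most expensive. Recovery times are assumed values.
SITES = [
    ("cold", timedelta(weeks=3)),
    ("warm", timedelta(days=3)),
    ("hot", timedelta(minutes=5)),
]


def cheapest_site(max_downtime: timedelta) -> Optional[str]:
    """Return the least expensive site type that recovers within max_downtime."""
    for name, recovery_time in SITES:
        if recovery_time <= max_downtime:
            return name
    return None  # no listed site type meets the requirement


if __name__ == "__main__":
    print(cheapest_site(timedelta(days=7)))
```

Ordering the list by cost means the first match is also the cheapest acceptable option, which mirrors how the choice is usually framed: pay only for as much redundancy as the tolerable downtime demands.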

Understanding and Developing a Comprehensive Network Security Policy

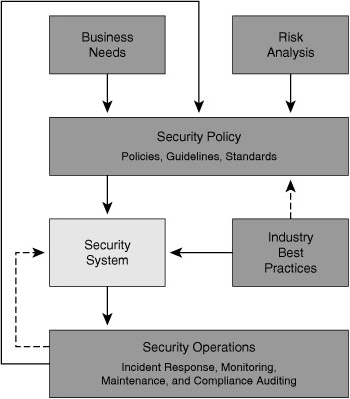

It is important to know that the security policy developed in your organization drives all the steps taken to secure network resources. The development of a comprehensive security policy prepares you for the rest of this course.

To create an effective security policy, you must also perform a risk analysis, which maximizes the effectiveness of the policy. It is also essential that everyone be aware of the policy; otherwise, it is doomed to fail.

Security Policy Overview

Every organization has something that someone else wants. Someone might want that something for himself, or he might want the satisfaction of denying something to its rightful owner. Your assets are what need the protection of a security policy.

Determine what your assets are by asking (and answering) the following questions:

- What do you have that others want?
- What processes, data, or information systems are critical to you, your company, or your organization?
- What would stop your company or organization from doing business or fulfilling its mission?

The answers identify assets such as critical databases, vital applications, vital company customer and employee information, classified commercial information, shared drives, email servers, and web servers.

A security policy is a set of objectives for the company, rules of behavior for users and administrators, and requirements for system and management that collectively ensure the security of network and computer systems in an organization. A security policy is a “living document,” meaning that the document is never finished and is continuously updated as technology and employee requirements change.

The security policy translates, clarifies, and communicates the management position on security as defined in high-level security principles. The security policies act as a bridge between these management objectives and specific security requirements. The security policy informs users, staff, and managers of their obligatory requirements for protecting technology and information assets. The security policy should specify the mechanisms that you need to meet these requirements. The security policy also provides a baseline from which to acquire, configure, and audit computer systems and networks for compliance with the security policy. Therefore, an attempt to use a set of security tools in the absence of at least an implied security policy is meaningless.

One of the most common security policy components is an acceptable use policy (AUP). This component defines what users are allowed and not allowed to do on the various components of the system, including the type of traffic that is allowed on the networks. The AUP should be as explicit as possible to avoid ambiguity or misunderstanding. For example, an AUP might list the prohibited website categories.

The audience for the security policy should be anyone who might have access to your network, including employees, contractors, suppliers, and customers. However, the security policy should treat each of these groups differently.

The audience determines the content of the policy. For example, you probably do not need to include a description of why something is necessary in a policy that is intended for the technical staff. You can assume that the technical staff already knows why a particular requirement is included. Managers are also not likely to be interested in the technical aspects of why a particular requirement is needed. However, they might want the high-level overview or the principles supporting the requirement. When end users know why a particular security control has been included, they are more likely to comply with the policy.

One document will not likely meet the needs of the entire audience of a large organization. The goal is to ensure that the information security policy documents are consistent with the needs of their audiences.

Security Policy Components

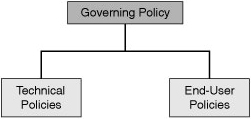

Figure 1-20 shows the hierarchy of a corporate policy structure that is aimed at effectively meeting the needs of all audiences.

Most corporations should use a suite of policy documents to meet their wide and varied needs:

- Governing policy: This policy is a high-level treatment of security concepts that are important to the company. Managers and technical custodians are the intended audience. The governing policy controls all security-related interaction among business units and supporting departments in the company. In terms of detail, the governing policy answers the “what” security policy questions.
- End-user policies: This document covers all security topics important to end users. In terms of detail level, end-user policies answer the “what,” “who,” “when,” and “where” security policy questions at an appropriate level of detail for an end user.
- Technical policies: Security staff members use technical policies as they carry out their security responsibilities for the system. These policies are more detailed than the governing policy and are system or issue specific (for example, access control or physical security issues). In terms of detail, technical policies answer the “what,” the “who,” the “when,” and the “where” security policy questions. The “why” is left to the owner of the information.

Governing Policy

The governing policy outlines the security concepts that are important to the company for managers and technical custodians:

- The governing policy controls all security-related interactions among business units and supporting departments in the company.
- The governing policy aligns closely with existing company policies, especially human resource policies, but also any other policy that mentions security-related issues such as email, computer use, or related IT subjects.
- The governing policy is placed at the same level as all companywide policies.
- The governing policy supports the technical and end-user policies.

A governing policy includes the following key components:

- A statement of the issue that the policy addresses
- A statement about your position as IT manager on the policy
- How the policy applies in the environment
- The roles and responsibilities of those affected by the policy
- What level of compliance to the policy is necessary
- Which actions, activities, and processes are allowed and which are not
- What the consequences of noncompliance are
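As an illustration only, the seven components listed above can be treated as a checklist when drafting a governing policy. The following minimal sketch assumes a hypothetical `GoverningPolicy` record and a hypothetical `missing_components` helper; neither the names nor the structure come from any standard, and the sample text is invented for the example.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class GoverningPolicy:
    """Hypothetical record with one field per key component listed above."""
    issue_statement: str = ""
    management_position: str = ""
    applicability: str = ""
    roles_and_responsibilities: str = ""
    compliance_level: str = ""
    allowed_and_prohibited: str = ""
    noncompliance_consequences: str = ""


def missing_components(policy: GoverningPolicy) -> List[str]:
    """Return the names of components that are empty or whitespace-only."""
    return [f.name for f in fields(policy) if not getattr(policy, f.name).strip()]


# Example draft with only two components filled in (sample text is invented).
draft = GoverningPolicy(
    issue_statement="Protect company information assets.",
    compliance_level="Mandatory for all employees.",
)
print(missing_components(draft))
```

A draft would be considered complete, in this sketch, only when `missing_components` returns an empty list.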

End-User Policies

The end-user policy is a single policy document that covers all the policy topics pertaining to information security that end users should know about, comply with, and implement. This policy may overlap with the technical policies and is at the same level as a technical policy. Grouping all the end-user policies together means that users have to go to only one place and read one document to learn everything that they need to do to ensure compliance with the company security policy.

Technical Policies

Security staff members use the technical policies in the conduct of their daily security responsibilities. These policies are more detailed than the governing policy and are system or issue specific (for example, router security or physical security issues). These policies are essentially security handbooks that describe what the security staff does, but not how the security staff performs its functions.

The following are typical policy categories for technical policies:

- General policies
  - Acceptable use policy (AUP): Defines the acceptable use of equipment and computing services, and the appropriate security measures that employees should take to protect corporate resources and proprietary information.
  - Account access request policy: Formalizes the account and access request process within the organization. Users and system administrators who bypass the standard processes for account and access requests can expose the organization to legal action.
  - Acquisition assessment policy: Defines the responsibilities regarding corporate acquisitions and defines the minimum requirements that the information security group must complete for an acquisition assessment.
  - Audit policy: Provides the basis for conducting audits and risk assessments to ensure the integrity of information and resources, investigating incidents, ensuring conformance to security policies, and monitoring user and system activity where appropriate.
  - Information sensitivity policy: Defines the requirements for classifying and securing information in a manner appropriate to its sensitivity level.
  - Password policy: Defines the standards for creating, protecting, and changing strong passwords.
  - Risk-assessment policy: Defines the requirements and provides the authority for the information security team to identify, assess, and remediate risks to the information infrastructure that is associated with conducting business.
  - Global web server policy: Defines the standards that are required of all web hosts.

- Email policies
  - Automatically forwarded email policy: Documents the policy restricting automatic email forwarding to an external destination without prior approval from the appropriate manager or director.
  - Email policy: Defines the standards to prevent tarnishing the public image of the organization.
  - Spam policy: The AUP covers spam.

- Remote-access policies
  - Dial-in access policy: Defines the appropriate dial-in access and its use by authorized personnel.
  - Remote-access policy: Defines the standards for connecting to the organization network from any host or network external to the organization.
  - VPN security policy: Defines the requirements for remote-access IP Security (IPsec) or Layer 2 Tunneling Protocol (L2TP) VPN connections to the organization network.

- Telephony policies
  - Analog and ISDN line policy: Defines the standards for using analog and ISDN lines to send and receive faxes and to connect to computers.
  - Personal communication device policy: Defines the information security requirements for personal communication devices, such as voicemail, IP phones, softphones, and so on.

- Application policies
  - Acceptable encryption policy: Defines the requirements for encryption algorithms that are used within the organization.
  - Application service provider (ASP) policy: Defines the minimum security criteria that an ASP must meet before the organization uses it on a project.
  - Database credentials coding policy: Defines the requirements for securely storing and retrieving database usernames and passwords.
  - Interprocess communications policy: Defines the security requirements that any two or more processes must meet when they communicate with each other using a network socket or operating system socket.
  - Project security policy: Defines requirements for project managers to review all projects for possible security requirements.
  - Source code protection policy: Establishes minimum information security requirements for managing product source code.

- Network policies
  - Extranet policy: Defines the requirement that third-party organizations that need access to the organization networks must sign a third-party connection agreement.
  - Minimum requirements for network access policy: Defines the standards and requirements for any device that requires connectivity to the internal network.
  - Network access standards: Defines the standards for secure physical port access for all wired and wireless network data ports.
  - Router and switch security policy: Defines the minimal security configuration standards for routers and switches inside a company production network or used in a production capacity.
  - Server security policy: Defines the minimal security configuration standards for servers inside a company production network or used in a production capacity.

- Wireless communication policy: Defines standards for wireless systems that are used to connect to the organization networks.
- Document retention policy: Defines the minimal systematic review, retention, and destruction of documents received or created during the course of business. The categories of retention policy include, among others:
  - Electronic communication retention policy: Defines standards for the retention of email and instant messaging.
  - Financial retention policy: Defines standards for the retention of bank statements, annual reports, pay records, accounts payable and receivable, and so on.
  - Employee records retention policy: Defines standards for the retention of employee personal records.
  - Operation records retention policy: Defines standards for the retention of past inventory information, training manuals, supplier lists, and so forth.