This chapter describes a hierarchical modular design approach called multilayer design. This chapter examines the designs of the Enterprise Campus and the Enterprise Data Center network infrastructures. First, it addresses general campus design considerations, followed by a discussion of the design of each of the modules and layers within the Enterprise Campus. The chapter concludes with an introduction to design considerations for the Enterprise Data Center.

Campus Design Considerations

The multilayer approach to campus network design combines data link layer and multilayer switching to achieve robust, highly available campus networks. This section discusses factors to consider in a Campus LAN design.

Designing an Enterprise Campus

The Enterprise Campus network is the foundation for enabling business applications, enhancing productivity, and providing a multitude of services to end users. The following three characteristics should be considered when designing the campus network:

- Network application characteristics: The organizational requirements, services, and applications place stringent requirements on a campus network solution, for example, in terms of bandwidth and delay.
- Environmental characteristics: The network’s environment includes its geography and the transmission media used.
  - The physical environment of the building or buildings influences the design, as do the number of, distribution of, and distance between the network nodes (including end users, hosts, and network devices). Other factors include space, power, and heating, ventilation, and air conditioning support for the network devices.
  - Cabling is one of the biggest long-term investments in network deployment. Therefore, transmission media selection depends not only on the required bandwidth and distances, but also on the emerging technologies that might be deployed over the same infrastructure in the future.
- Infrastructure device characteristics: The characteristics of the network devices selected influence the design (for example, they determine the network’s flexibility) and contribute to the overall delay. Trade-offs between data link layer switching (based on media access control [MAC] addresses) and multilayer switching (based on network layer addresses, transport layer, and application awareness) need to be considered.
  - High availability and high throughput might need to be considered throughout the infrastructure.
  - Most Enterprise Campus designs use a combination of data link layer switching in the access layer and multilayer switching in the distribution and core layers.
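The difference between the two forwarding models named in the list can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any real switch is implemented: the class names `Layer2Switch` and `MultilayerSwitch`, the port labels, and all addresses are hypothetical.

```python
import ipaddress


class Layer2Switch:
    """Toy data link layer switch: forwards on the destination MAC only."""

    def __init__(self):
        self.mac_table = {}  # MAC address -> port (hypothetical labels)

    def learn(self, src_mac, port):
        # Remember the port on which a source MAC address was seen.
        self.mac_table[src_mac] = port

    def forward(self, dst_mac):
        # Known MAC: one egress port. Unknown MAC: flood to all ports.
        return self.mac_table.get(dst_mac, "flood")


class MultilayerSwitch:
    """Toy multilayer switch: forwards on the destination IP prefix."""

    def __init__(self):
        self.routes = []  # list of (network, port)

    def add_route(self, prefix, port):
        self.routes.append((ipaddress.ip_network(prefix), port))

    def forward(self, dst_ip):
        # Longest-prefix match over the configured routes.
        addr = ipaddress.ip_address(dst_ip)
        matches = [(net, port) for net, port in self.routes if addr in net]
        if not matches:
            return "drop"
        return max(matches, key=lambda m: m[0].prefixlen)[1]
```

The data link layer lookup is a flat table keyed by MAC address, whereas the network layer lookup has to compare prefixes; that extra decision logic is where multilayer switching gains its flexibility.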

The following sections examine these factors.

Network Application Characteristics and Considerations

The network application’s characteristics and requirements influence the design in many ways. The applications that are critical to the organization, and the network demands of these applications, determine enterprise traffic patterns inside the Enterprise Campus network, which influences bandwidth usage, response times, and the selection of the transmission medium.

Different types of application communication result in varying network demands. The following sections review four types of application communication:

- Peer-peer
- Client–local server
- Client–Server Farm
- Client–Enterprise Edge

Peer-Peer Applications

From the network designer’s perspective, peer-peer applications include applications in which the majority of network traffic passes from one network edge device to another through the organization’s network, as shown in Figure 4-1. Typical peer-peer applications include the following:

- Instant messaging: After the connection is established, the conversation is directly between two peers.
- IP phone calls: Two peers establish communication with the help of an IP telephony manager; however, the conversation occurs directly between the two peers when the connection is established. The network requirements of IP phone calls are strict because of the need for quality of service (QoS) treatment to minimize delay and variation in delay (jitter).

  Note: QoS is discussed in the later section “QoS Considerations in LAN Switches.”
- File sharing: Some operating systems and applications require direct access to data on other workstations.
- Videoconference systems: Videoconferencing is similar to IP telephony; however, the network requirements are usually higher, particularly related to bandwidth consumption and QoS.
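To make the distinction between delay and jitter concrete, the following minimal sketch computes both from a list of one-way packet delays. The function name `delay_stats` and the sample values are hypothetical, and jitter is taken here simply as the mean absolute difference between consecutive delays, which is a simplification of the estimators used by real voice and video protocols.

```python
from statistics import mean


def delay_stats(delays_ms):
    """Return (mean delay, jitter) for one-way packet delays in milliseconds.

    Jitter is approximated as the mean absolute difference between the
    delays of consecutive packets (an assumption made for illustration).
    """
    if len(delays_ms) < 2:
        raise ValueError("need at least two delay samples")
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return mean(delays_ms), mean(diffs)


# Hypothetical samples: both flows have the same average delay,
# but the second one varies far more from packet to packet.
steady = [20, 21, 20, 21, 20]
bursty = [5, 40, 8, 35, 14]

steady_delay, steady_jitter = delay_stats(steady)
bursty_delay, bursty_jitter = delay_stats(bursty)
```

Two flows with identical average delay can differ widely in jitter, which is why voice and video traffic need QoS treatment rather than bandwidth alone.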

Client–Local Server Applications

Historically, clients and servers were attached to a network device on the same LAN segment and followed the 80/20 workgroup rule for client/server applications. This rule indicates that 80 percent of the traffic is local to the LAN segment and 20 percent leaves the segment.

With increased traffic on the corporate network and a relatively fixed location for users, an organization might split the network into several isolated segments, as shown in Figure 4-2. Each of these segments has its own servers, known as local servers, for its application. In this scenario, servers and users are located in the same VLAN, and department administrators manage and control the servers. The majority of department traffic occurs in the same segment, but some data exchange (to a different VLAN) happens over the campus backbone. The bandwidth requirements for traffic passing to another segment typically are not crucial. For example, traffic to the Internet goes through a common segment and has lower performance requirements than traffic to the local segment servers.

Client–Server Farm Applications

Large organizations require their users to have fast, reliable, and controlled access to critical applications.

Because high-performance multilayer switches have an insignificant switch delay, and because of the reduced cost of network bandwidth, locating the servers centrally rather than in the workgroup is technically feasible and reduces support costs.

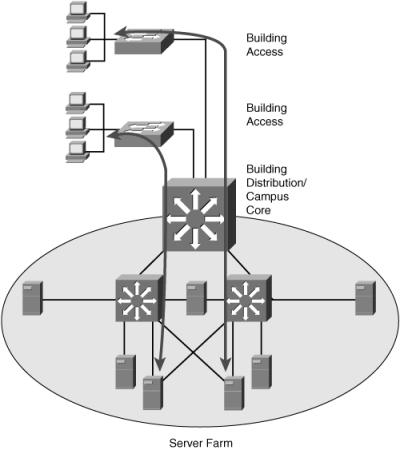

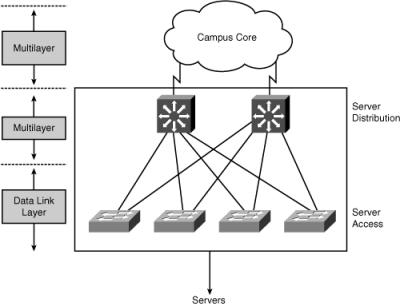

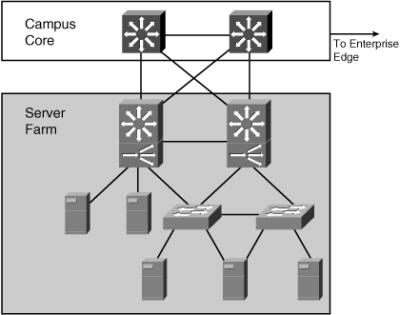

To fulfill these demands and keep administrative costs down, the servers are located in a common Server Farm, as shown in Figure 4-3. Using a Server Farm requires a network infrastructure that is highly resilient (providing security) and redundant (providing high availability) and that provides adequate throughput. High-end LAN switches with the fastest LAN technologies, such as Gigabit Ethernet, are typically deployed in such an environment.

In a large organization, application traffic might have to pass across more than one wiring closet, LAN, or VLAN to reach servers in a Server Farm. Client–Server Farm applications apply the 20/80 rule, where only 20 percent of the traffic remains on the local LAN segment, and 80 percent leaves the segment to reach centralized servers, the Internet, and so on. Such applications include the following:

- Organizational mail servers (such as Microsoft Exchange)
- Common file servers (such as Microsoft and Sun)
- Common database servers for organizational applications (such as Oracle)
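The effect of moving from the 80/20 rule to the 20/80 rule on the campus backbone can be shown with simple arithmetic. The sketch below is illustrative only: the function name `split_traffic` and the 400-Mbps offered load are assumptions, not figures from the text.

```python
def split_traffic(total_mbps, local_fraction):
    """Split a segment's offered load into local and backbone-bound parts."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    local = total_mbps * local_fraction
    return local, total_mbps - local


# Hypothetical segment offering 400 Mbps in total.
# 80/20 rule: 80 percent stays local (client-local server model).
workgroup_local, workgroup_backbone = split_traffic(400, 0.80)
# 20/80 rule: only 20 percent stays local (client-Server Farm model).
farm_local, farm_backbone = split_traffic(400, 0.20)
```

With the same offered load, the traffic that leaves the segment grows fourfold under the 20/80 rule, which is why Server Farm designs place higher throughput demands on uplinks and the backbone.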

Client–Enterprise Edge Applications

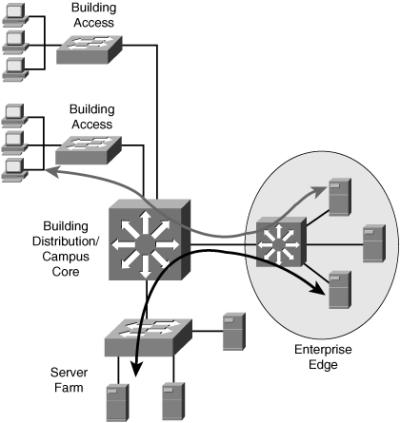

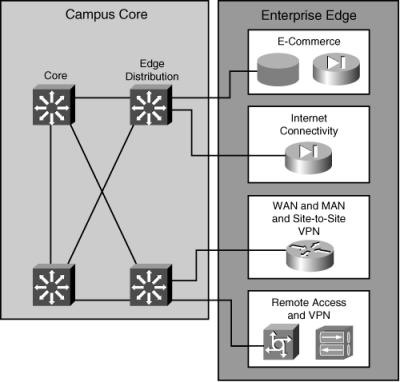

As shown in Figure 4-4, client–Enterprise Edge applications use servers on the Enterprise Edge to exchange data between the organization and its public servers. The most important issues between the Enterprise Campus network and the Enterprise Edge are security and high availability; data exchange with external entities must be in constant operation. Applications installed on the Enterprise Edge can be crucial to organizational process flow; therefore, any outages can increase costs.

Typical Enterprise Edge applications are based on web technologies. Examples of these application types—such as external mail and DNS servers and public web servers—can be found in any organization.

Organizations that support their partnerships through e-commerce applications also place their e-commerce servers into the Enterprise Edge. Communication with these servers is vital because of the two-way replication of data. As a result, high redundancy and resiliency of the network, along with security, are the most important requirements for these applications.

Application Requirements

Table 4-1 lists the types of application communication and compares their requirements with respect to some important network parameters. The following sections discuss these parameters.

[Table 4-1: comparison of the application communication types by network parameter; the cell contents did not survive in this copy.]

Connectivity

The wide use of LAN switching at Layer 2 has revolutionized local-area networking and has resulted in increased performance and more bandwidth for satisfying the requirements of new organizational applications. LAN switches provide this performance benefit by increasing bandwidth and throughput for workgroups and local servers.

Throughput

The required throughput varies from application to application. An application that exchanges data between users in the workgroup usually does not require a high throughput network infrastructure. However, organizational-level applications usually require a high-capacity link to the servers, which are usually located in the Server Farm.

Applications located on servers in the Enterprise Edge are normally not as bandwidth-consuming as applications in the Server Farm, but they might require high availability and security features.

High Availability

The high availability of an application is a function of the application and the entire network between a client workstation and a server located in the network. Although the network design primarily determines the network’s availability, the individual components’ mean time between failures (MTBF) is a factor. Redundancy in the Building Distribution and Campus Core layers is recommended.
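The relationship between component MTBF, redundancy, and end-to-end availability can be sketched as follows. The text does not give a formula, so this assumes the common steady-state model of availability as MTBF divided by MTBF plus mean time to repair (MTTR), and it assumes that components fail independently. The function names and the MTBF and MTTR figures are hypothetical.

```python
def availability(mtbf_hours, mttr_hours):
    """Steady-state availability of one component (assumed model)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def series(*parts):
    """All parts must work, as in a single chain of switches."""
    result = 1.0
    for a in parts:
        result *= a
    return result


def parallel(*parts):
    """At least one part must work, as with redundant components."""
    all_fail = 1.0
    for a in parts:
        all_fail *= (1.0 - a)
    return 1.0 - all_fail


# Hypothetical switch: 50,000 hours MTBF, 4 hours to repair.
switch = availability(mtbf_hours=50_000, mttr_hours=4)

# Client to server through access, distribution, and core switches.
single_path = series(switch, switch, switch)

# Same path with redundant distribution and core switches.
redundant_path = series(switch, parallel(switch, switch), parallel(switch, switch))
```

Under these assumptions the redundant path is limited mainly by the single access switch, which illustrates why redundancy is recommended first in the Building Distribution and Campus Core layers.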

Total Network Cost

Depending on the application and the resulting network infrastructure, the cost varies from low in a peer-peer environment to high in a network with redundancy in the Building Distribution, Campus Core, and Server Farm. In addition to the cost of duplicate components for redundancy, costs include the cables, routers, switches, software, and so forth.

Environmental Characteristics and Considerations

The campus environment, including the location of the network nodes, the distance between the nodes, and the transmission media used, influences the network topology. This section examines these considerations.

Network Geography Considerations

The location of Enterprise Campus nodes and the distances between them determine the network’s geography.

Nodes, including end-user workstations and servers, can be located in one or multiple buildings. Given the location of the nodes and the distances between them, the network designer chooses the technology that should interconnect them according to the required maximum speed, distance, and so forth.

Consider the following structures with respect to the network geography:

- Intrabuilding
- Interbuilding
- Distant remote building

These geographic structures, described in the following sections, serve as guides to help determine Enterprise Campus transmission media and the logical modularization of the Enterprise Campus network.

Intrabuilding Structure

An intrabuilding campus network structure provides connectivity for all end nodes located in the same building and gives them access to the network resources. The Building Access and Building Distribution layers are typically located in the same building.

User workstations are usually attached to the Building Access switches in the floor wiring closet with twisted-pair copper cables. Wireless LANs (WLANs) can also be used to provide intrabuilding connectivity, enabling users to establish and maintain a wireless network connection throughout (or between) buildings, without the limitations of wires or cables.

Access layer switches usually connect to the Building Distribution switches over optical fiber, providing better transmission performance and less sensitivity to environmental disturbances than copper. Depending on the connectivity requirements to resources in other parts of the campus, the Building Distribution switches may be connected to Campus Core switches.

Interbuilding Structure

As shown in Figure 4-5, an interbuilding network structure provides connectivity between the individual campus buildings’ central switches (in the Building Distribution and/or Campus Core layers). These buildings are usually in close proximity, typically only a few hundred meters to a few kilometers apart.

Because the nodes in all campus buildings usually share common devices such as servers, the demand for high-speed connectivity between the buildings is high. Within a campus, companies might deploy their own physical transmission media. To provide high throughput without excessive interference from environmental conditions, optical fiber is the medium of choice between the buildings.

Depending on the connectivity requirements to resources in other parts of the campus, the Building Distribution switches might be connected to Campus Core switches.

Distant Remote Building Structure

When connecting buildings at distances that exceed a few kilometers (but still within a metropolitan area), the most important factor to consider is the physical media. The speed and cost of the network infrastructure depend heavily on the media selection.

If the bandwidth requirements are higher than the physical connectivity options can support, the network designer must identify the organization’s critical applications and then select the equipment that supports intelligent network services—such as QoS and filtering capabilities—that allow optimal use of the bandwidth.
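The idea of protecting critical applications on a constrained link can be illustrated with a strict-priority allocation. This is a deliberately simplified sketch: real QoS mechanisms (queuing, policing, shaping) are more nuanced, and the function name `allocate_bandwidth`, the application names, priorities, and demands are all hypothetical.

```python
def allocate_bandwidth(link_mbps, demands):
    """Serve applications in priority order until the link is full.

    demands: list of (name, priority, mbps); a lower priority number
    means a more critical application.
    Returns a dict mapping each name to the bandwidth it is granted.
    """
    remaining = link_mbps
    granted = {}
    for name, _priority, mbps in sorted(demands, key=lambda d: d[1]):
        share = min(mbps, remaining)
        granted[name] = share
        remaining -= share
    return granted


# Hypothetical demands totalling 150 Mbps on a 100-Mbps link.
demands = [
    ("backup", 3, 80),
    ("voice", 1, 10),
    ("erp", 2, 60),
]
grants = allocate_bandwidth(100, demands)
```

In this example the most critical applications receive their full demand and only the least critical one is squeezed, which is the outcome the designer is aiming for when the physical media cannot be upgraded.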

Some companies might own their media, such as fiber, microwave, or copper lines. However, if the organization does not own physical transmission media to certain remote locations, the Enterprise Campus must connect through the Enterprise Edge using connectivity options from public service providers, such as traditional WAN links or Metro Ethernet.

The risk of downtime and the service level agreements available from the service providers must also be considered. For example, inexpensive but unreliable and slowly repaired fiber is not desirable for mission-critical applications.

Transmission Media Considerations

An Enterprise Campus can use various physical media to interconnect devices. The type of cable is an important consideration when deploying a new network or upgrading an existing one. Cabling infrastructure represents a long-term investment—it is usually installed to last for ten years or more. The cost of the medium (including installation costs) and the available budget must be considered in addition to the technical characteristics such as signal attenuation and electromagnetic interference.

A network designer must be aware of physical media characteristics, because they influence the maximum distance permitted between devices and the network’s maximum transmission speed. Twisted-pair cables (copper), optical cables (fiber), and wireless (satellite, microwave, and Institute of Electrical and Electronics Engineers [IEEE] 802.11 LANs) are the most common physical transmission media used in modern networks.

Copper

Twisted-pair cables consist of four pairs of insulated wires that are wrapped together in a plastic jacket. With unshielded twisted-pair (UTP), no additional foil or wire is wrapped around the core wires. This makes these cables less expensive, but also less immune to external electromagnetic influences than shielded twisted-pair cables. Twisted-pair cabling is widely used to interconnect workstations, servers, or other devices from their network interface card (NIC) to the network connector at a wall outlet.

The characteristics of twisted-pair cables depend on the quality of the material from which they are made. As a result, twisted-pair cables are sorted into categories. Category 5 or greater is recommended for speeds of 100 megabits per second (Mbps) or higher. Category 6 is recommended for Gigabit Ethernet. Because of signal attenuation in the wires, the maximum cable length is usually limited to 100 meters. Another reason for this length limitation is collision detection on shared (half-duplex) segments. If one PC starts to transmit and another PC is more than 100 meters away, the second PC might not detect the signal on the wire in time and could therefore start to transmit at the same time, causing a collision on the wire.

One of the main considerations in network cabling design is electromagnetic interference. Because it is highly susceptible to interference, twisted pair is not suitable for environments with strong electromagnetic interference. Similarly, twisted pair is not appropriate for environments that can be affected by the interference created by the cable itself.

Distances longer than 100 meters may require Long-Reach Ethernet (LRE). LRE is a Cisco-proprietary technology that runs on voice-grade copper wires; it allows longer distances than traditional Ethernet and is used as an access technology in WANs. Chapter 5 further describes LRE.

Optical Fiber

Typical requirements that lead to the selection of optical fiber cable as a transmission medium include distances longer than 100 meters and immunity to electromagnetic interference. Different types of optical cable exist; the two main types are multimode (MM) and single-mode (SM).

Multimode fiber is optical fiber that carries multiple light waves or modes concurrently, each at a slightly different reflection angle within the optical fiber core. Because modes tend to disperse over longer lengths (modal dispersion), MM fiber transmission is used for relatively short distances. Typically, LEDs are used with MM fiber. The typical core diameter of an MM fiber is 50 or 62.5 micrometers.

Single-mode (also known as monomode) fiber is optical fiber that carries a single wave (or laser) of light. Lasers are typically used with SM fiber. The typical diameter of an SM fiber core is between 2 and 10 micrometers. Single-mode fiber limits dispersion and loss of light, and therefore allows for higher transmission speeds, but it is more expensive than multimode fiber.

Both MM and SM cables have lower loss of signal than copper cable. Therefore, optical cables allow longer distances between devices. Optical fiber cable has precise production and installation requirements; therefore, it costs more than twisted-pair cable.

Optical fiber requires a precise technique for cable coupling. Even a small deviation from the ideal position of optical connectors can result in either a loss of signal or a large number of frame losses. Careful attention during optical fiber installation is imperative because of the traffic’s high sensitivity to coupling misalignment. In environments where the cable does not consist of a single fiber from point to point, coupling is required, and loss of signal can easily occur.

Wireless

The inherent nature of wireless is that it does not require wires to carry information across geographic areas that are otherwise prohibitive to connect. WLANs can either replace a traditional wired network or extend its reach and capabilities. In-building WLAN equipment includes access points (AP) that perform functions similar to wired networking hubs, and PC client adapters. APs are distributed throughout a building to expand range and functionality for wireless clients. Wireless bridges and APs can also be used for interbuilding connectivity and outdoor wireless client access.

Wireless clients supporting IEEE 802.11g allow speeds of up to 54 Mbps in the 2.4-GHz band over a range of about 100 feet. The IEEE 802.11b standard supports speeds of up to 11 Mbps in the 2.4-GHz band. The IEEE 802.11a standard supports speeds of up to 54 Mbps in the 5-GHz band.

Transmission Media Comparison

Table 4-2 presents various characteristics of the transmission media types.

Table 4-2 Transmission Media Comparison

The parameters listed in Table 4-2 are as follows:

- Distance: The maximum distance between network devices (such as workstations, servers, printers, and IP phones) and network nodes, and between network nodes. The distances supported with fiber vary, depending on whether it supports Fast Ethernet or Gigabit Ethernet, the type of fiber used, and the fiber interface used.

- Bandwidth: The required bandwidth in a particular segment of the network, or the connection speed between the nodes inside or outside the building.

  Note: The wireless throughput is significantly less than its maximum data rate due to the half-duplex nature of radio frequency technology.

- Price: Along with the price of the medium, the installation cost must be considered. For example, fiber installation costs are significantly higher than copper installation costs because of strict requirements for optical cable coupling.

- Deployment area: Indicates whether wiring is for wiring closet only (where users access the network), for internode, or for interbuilding connections.

When deploying devices in an area with high electrical or magnetic interference (for example, in an industrial environment), you must pay special attention to media selection. In such environments, the disturbances might interfere with data transfer and therefore result in an increased number of frame errors. Electrical grounding can isolate some external disturbance, but the additional wiring increases costs. Fiber-optic installation is the only reasonable solution for such networks.

Cabling Example

Figure 4-6 illustrates a typical campus network structure. End devices such as workstations, IP phones, and printers are no more than 100 m away from the LAN switch. UTP wiring can easily handle the required distance and speed; it is also easy to set up, and the price-performance ratio is reasonable.

Optical fiber cables handle the higher speeds and longer distances that may be required among switch devices. MM optical cable is usually satisfactory inside the building. Depending on distance, organizations use MM or SM optical cable for interbuilding communication. If the distances are short (up to 500 m), MM fiber is a more reasonable solution for speeds up to 1 Gbps.

However, an organization can install SM fiber if its requirements are for longer distances, or if there are plans for future higher speeds (for example, 10 Gbps).
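The rules of thumb in this cabling example can be summarized as a small decision function. This is only a sketch: the function name `suggest_medium` and its parameters are hypothetical, and the thresholds (100 m for UTP, 500 m and 1 Gbps for MM fiber) are simply the figures quoted in this section, not limits from any standard.

```python
def suggest_medium(distance_m: float, speed_gbps: float, high_emi: bool = False) -> str:
    """Return a rough media suggestion based on this section's rules of thumb.

    Assumptions (taken from the text, not from a standard):
      - UTP covers runs up to 100 m at up to 1 Gbps when interference is low.
      - MM fiber is reasonable up to 500 m at up to 1 Gbps.
      - SM fiber is chosen for longer runs or planned higher speeds.
    """
    if distance_m <= 100 and speed_gbps <= 1 and not high_emi:
        return "UTP"
    if distance_m <= 500 and speed_gbps <= 1:
        return "MM fiber"
    return "SM fiber"


if __name__ == "__main__":
    print(suggest_medium(80, 0.1))                  # workstation to LAN switch
    print(suggest_medium(80, 0.1, high_emi=True))   # same run on a factory floor
    print(suggest_medium(400, 1))                   # short interbuilding link
    print(suggest_medium(2000, 1))                  # long interbuilding link
```

A real design would also weigh price and deployment area, which this sketch leaves out.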

Infrastructure Device Characteristics and Considerations

Network end-user devices are commonly connected using switched technology rather than using a shared media segment. Switched technology provides dedicated network bandwidth for each device on the network. Switched networks can support network infrastructure services, such as QoS, security, and management; a shared media segment cannot support these features.

In the past, LAN switches were Layer 2–only devices. Data link layer (Layer 2) switching supports multiple simultaneous frame flows. Multilayer switching performs packet switching and several functions at Layer 3 and at higher Open Systems Interconnection (OSI) layers and can effectively replace routers in the LAN switched environment. Deciding whether to deploy pure data link layer switches or multilayer switches in the enterprise network is not a trivial decision. It requires a full understanding of the network topology and user demands.

When deciding on the type of switch to use and the features to be deployed in a network, consider the following factors:

- Infrastructure service capabilities: The network services that the organization requires (IP multicast, QoS, and so on).

- Size of the network segments: How the network is segmented and how many end devices will be connected, based on traffic characteristics.

- Convergence time: The maximum amount of time the network will be unavailable in the event of network outages.

- Cost: The budget for the network infrastructure. Note that multilayer switches are typically more expensive than their Layer 2 counterparts; however, multilayer functionality can be obtained by adding cards and software to a modular Layer 2 switch.

The following sections examine these infrastructure characteristics: convergence time, multilayer switching and Cisco Express Forwarding, IP multicast, QoS, and load sharing.

Convergence Time

Loop-prevention mechanisms in a Layer 2 topology cause the Spanning Tree Protocol (STP) to take between 30 and 50 seconds to converge. To eliminate STP convergence issues in the Campus Core, all the links connecting core switches should be routed links, not VLAN trunks. This also limits the broadcast and failure domains.
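The 30 to 50 second range matches what the default IEEE 802.1D timers produce. The text does not name those timers, so the values below (forward delay of 15 seconds, max age of 20 seconds) are background assumptions, and the function name is hypothetical. A port spends one forward-delay interval in the listening state and one in learning; after an indirect failure the switch must also wait for the stored BPDU to age out.

```python
# Default IEEE 802.1D timer values, in seconds (assumed; not stated in the text).
FORWARD_DELAY_S = 15
MAX_AGE_S = 20


def stp_convergence_bounds(forward_delay: int = FORWARD_DELAY_S,
                           max_age: int = MAX_AGE_S) -> tuple[int, int]:
    """Return (direct_failure, indirect_failure) convergence times in seconds."""
    # Direct failure: the port moves through listening and learning.
    direct = 2 * forward_delay
    # Indirect failure: wait for max age to expire, then listening and learning.
    indirect = max_age + 2 * forward_delay
    return direct, indirect


if __name__ == "__main__":
    low, high = stp_convergence_bounds()
    print(f"STP convergence: between {low} and {high} seconds")
```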

In the case where multilayer switching is deployed everywhere, convergence is within seconds (depending on the routing protocol implemented) because all the devices detect their connected link failure immediately and act on it promptly (sending respective routing updates).

In a mixed Layer 2 and Layer 3 environment, the convergence time depends not only on the Layer 3 factors (including routing protocol timers such as hold-time and neighbor loss detection), but also on the STP convergence.

Using multilayer switching in a structured design reduces the scope of spanning-tree domains. It is common to use a routing protocol, such as Enhanced Interior Gateway Routing Protocol (EIGRP) or Open Shortest Path First (OSPF), to handle load balancing, redundancy, and recovery in the Campus Core.

Multilayer Switching and Cisco Express Forwarding

As noted in Chapter 3, “Structuring and Modularizing the Network,” in this book the term multilayer switching denotes a switch’s generic capability to use information at different protocol layers as part of the switching process; the term Layer 3 switching is a synonym for multilayer switching in this context.

The use of protocol information from multiple layers in the switching process is implemented in two different ways within Cisco switches. The first way is called multilayer switching (MLS), and the second way is called Cisco Express Forwarding.

Multilayer Switching

Multilayer switching, as its name implies, allows switching to take place at different protocol layers. Switching can be performed only on Layers 2 and 3, or it can also include Layer 4. MLS is based on network flows.

The three major components of MLS are as follows:

- MLS Route Processor (MLS-RP): The MLS-enabled router that performs the traditional function of routing between subnets

- MLS Switching Engine (MLS-SE): The MLS-enabled switch that can offload some of the packet-switching functionality from the MLS-RP

- Multilayer Switching Protocol (MLSP): Used by the MLS-RP and the MLS-SE to communicate with each other
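One way to picture flow-based switching is as a cache: the first packet of a flow is handed to the route processor, and later packets of the same flow are forwarded from the cached result. The sketch below is a toy model under that assumption. The class, the flow key (source and destination address only), and the interface names are all hypothetical, and MLSP signaling is not modeled.

```python
from typing import Callable, Dict, Tuple

Flow = Tuple[str, str]  # (source IP, destination IP); simplified, hypothetical flow key


class SwitchingEngine:
    """Toy MLS-SE: caches the routing decision made for the first packet of a flow."""

    def __init__(self, route_processor: Callable[[str], str]) -> None:
        self.route_processor = route_processor  # stands in for the MLS-RP
        self.flow_cache: Dict[Flow, str] = {}
        self.rp_lookups = 0

    def forward(self, src: str, dst: str) -> str:
        flow = (src, dst)
        if flow not in self.flow_cache:
            # First packet of the flow: ask the route processor and cache the answer.
            self.rp_lookups += 1
            self.flow_cache[flow] = self.route_processor(dst)
        # Later packets of the same flow are served from the cache.
        return self.flow_cache[flow]


def toy_route_processor(dst: str) -> str:
    """Hypothetical routing decision: pick an egress interface by destination."""
    return "Gi0/1" if dst.startswith("10.1.") else "Gi0/2"


se = SwitchingEngine(toy_route_processor)
ports = [se.forward("10.0.0.5", "10.1.0.9") for _ in range(3)]
print(ports, "route processor lookups:", se.rp_lookups)
```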

Cisco Express Forwarding

Cisco Express Forwarding, like MLS, aims to speed the data routing and forwarding process in a network. However, the two methods use different approaches.

Cisco Express Forwarding uses two components to optimize the lookup of the information required to route packets: the Forwarding Information Base (FIB) for the Layer 3 information and the adjacency table for the Layer 2 information.

Cisco Express Forwarding creates an FIB by maintaining a copy of the forwarding information contained in the IP routing table. The information is indexed, so it is quick to search for matching entries as packets are processed. Whenever the routing table changes, the FIB is also changed so that it always contains up-to-date paths. A separate routing cache is not required.

The adjacency table contains Layer 2 frame header information, including next-hop addresses, for all FIB entries. Each FIB entry can point to multiple adjacency table entries—for example, if two paths exist between devices for load balancing.

After a packet is processed and the route is determined from the FIB, the Layer 2 next-hop and header information is retrieved from the adjacency table, and the new frame is created to encapsulate the packet.
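The two-table lookup described above can be sketched in a few lines. This is an illustration only: the prefixes, next hops, interface names, and MAC addresses are made-up documentation values, and the linear longest-prefix scan stands in for the indexed structure a real FIB would use.

```python
import ipaddress

# FIB: destination prefix -> next-hop address (Layer 3 information; sample data).
fib = {
    ipaddress.ip_network("10.1.0.0/16"): "192.0.2.1",
    ipaddress.ip_network("10.1.2.0/24"): "192.0.2.2",
    ipaddress.ip_network("0.0.0.0/0"): "192.0.2.254",
}

# Adjacency table: next hop -> (egress interface, next-hop MAC) (Layer 2 information).
adjacency = {
    "192.0.2.1": ("Gi0/1", "00:00:5e:00:53:01"),
    "192.0.2.2": ("Gi0/2", "00:00:5e:00:53:02"),
    "192.0.2.254": ("Gi0/0", "00:00:5e:00:53:fe"),
}


def lookup(dst: str) -> tuple[str, str, str]:
    """Return (next_hop, interface, mac) for a destination address."""
    addr = ipaddress.ip_address(dst)
    # Longest-prefix match: of all prefixes containing the address, take the most specific.
    best = max((net for net in fib if addr in net), key=lambda net: net.prefixlen)
    next_hop = fib[best]
    interface, mac = adjacency[next_hop]
    return next_hop, interface, mac


if __name__ == "__main__":
    for dst in ("10.1.2.7", "10.1.9.9", "198.51.100.20"):
        print(dst, "->", lookup(dst))
```

Keeping the Layer 2 rewrite data in a separate table means several FIB entries can share one adjacency entry.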

Cisco Express Forwarding can be enabled on a router (for example, on a Cisco 7600 Series router) or on a switch with Layer 3 functionality (such as the Catalyst 6500 Series switch).

IP Multicast

A traditional IP network is not efficient when sending the same data to many locations; the data is sent in unicast packets and therefore is replicated on the network for each destination. For example, if a CEO’s annual video address is sent out on a company’s network for all employees to watch, the same data stream must be replicated for each employee. Obviously, this would consume many resources, including precious WAN bandwidth.

IP multicast technology enables networks to send data to a group of destinations in the most efficient way. The data is sent from the source as one stream; this single data stream travels as far as it can in the network. Devices replicate the data only if they need to send it out on multiple interfaces to reach all members of the destination group.
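A quick calculation shows the difference on the link closest to the source. The stream rate and receiver count below are invented for illustration, and the function name is hypothetical.

```python
def source_link_load_mbps(stream_mbps: float, receivers: int, multicast: bool) -> float:
    """Load on the source's own link when one stream is sent to many receivers."""
    # Unicast: one copy per receiver leaves the source.
    # Multicast: a single copy leaves the source; the network replicates it downstream.
    return stream_mbps if multicast else stream_mbps * receivers


if __name__ == "__main__":
    rate, viewers = 1.5, 200  # hypothetical 1.5-Mbps video watched by 200 employees
    print("unicast  :", source_link_load_mbps(rate, viewers, multicast=False), "Mbps")
    print("multicast:", source_link_load_mbps(rate, viewers, multicast=True), "Mbps")
```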

Multicast groups are identified by Class D IP addresses, which are in the range from 224.0.0.0 to 239.255.255.255. IP multicast involves some new protocols for network devices, including two for informing network devices which hosts require which multicast data stream and one for determining the best way to route multicast traffic. These three protocols are described in the following sections.
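The Class D range corresponds to addresses whose four high-order bits are 1110 (224.0.0.0/4). As a minimal check, Python's standard `ipaddress` module exposes this through the `is_multicast` property; the helper name `is_class_d` is just for illustration.

```python
import ipaddress


def is_class_d(address: str) -> bool:
    """Return True if the IPv4 address falls in 224.0.0.0 through 239.255.255.255."""
    addr = ipaddress.IPv4Address(address)
    # Equivalent bit test: the top four bits of the 32-bit address are 1110.
    assert addr.is_multicast == ((int(addr) >> 28) == 0b1110)
    return addr.is_multicast


if __name__ == "__main__":
    for candidate in ("223.255.255.255", "224.0.0.0", "239.255.255.255", "240.0.0.0"):
        print(candidate, is_class_d(candidate))
```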

Internet Group Management Protocol and Cisco Group Management Protocol

Internet Group Management Protocol (IGMP) is used between hosts and their local routers. Hosts register with the router to join (and leave) specific multicast groups; the router then knows that it needs to forward the data stream destined for a specific multicast group to the registered hosts.

In a typical network, hosts are not directly connected to routers but are connected to a Layer 2 switch, which is in turn connected to the router. IGMP is a network layer (Layer 3) protocol. Consequently, Layer 2 switches do not participate in IGMP and therefore are not aware of which hosts attached to them might be part of a particular multicast group. By default, Layer 2 switches flood multicast frames to all ports (except the port from which the frame originated), which means that all multicast traffic received by a switch would be sent out on all ports, even if only one device on one port required the data stream. Cisco therefore developed Cisco Group Management Protocol (CGMP), which is used between switches and routers. The routers tell each of their directly connected switches about IGMP registrations that were received from hosts through the switch—in other words, from hosts accessible through the switch. The switch then forwards the multicast traffic only to ports that those requesting hosts are on, rather than flooding the data to all ports. Switches, including non-Cisco switches, can alternatively use IGMP snooping to eavesdrop on the IGMP messages sent between routers and hosts to learn similar information.
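Whether the switch learns group membership from CGMP messages or from IGMP snooping, the effect on forwarding is the same: a per-group list of member ports replaces flooding. The sketch below models only that effect. The class and method names are hypothetical, and details such as router ports, timers, and the message formats themselves are left out.

```python
from typing import Dict, Iterable, Set


class Layer2Switch:
    """Toy switch that constrains multicast to ports with known group members."""

    def __init__(self, ports: Iterable[int]) -> None:
        self.ports: Set[int] = set(ports)
        self.group_ports: Dict[str, Set[int]] = {}

    def learn_join(self, group: str, port: int) -> None:
        # Learned via CGMP from the router, or by snooping an IGMP report.
        self.group_ports.setdefault(group, set()).add(port)

    def learn_leave(self, group: str, port: int) -> None:
        self.group_ports.get(group, set()).discard(port)

    def egress_ports(self, group: str, ingress_port: int) -> Set[int]:
        members = self.group_ports.get(group)
        if not members:
            # No membership information: default behavior is to flood.
            return self.ports - {ingress_port}
        return members - {ingress_port}


switch = Layer2Switch(ports=[1, 2, 3, 4])         # port 1 faces the router
before = switch.egress_ports("239.1.1.1", ingress_port=1)
switch.learn_join("239.1.1.1", port=2)            # host on port 2 joins the group
after = switch.egress_ports("239.1.1.1", ingress_port=1)
print("before join:", sorted(before), "after join:", sorted(after))
```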

Figure 4-7 illustrates the interaction of these two protocols. Hosts A and D register, using IGMP, to join the multicast group to receive data from the server. The router informs both switches of these registrations using CGMP. When the router forwards the multicast data to the hosts, the switches ensure that the data goes out of only the ports on which hosts A and D are connected. The ports on which hosts B and C are connected do not receive the multicast data.

Protocol-Independent Multicast Routing Protocol

Protocol-Independent Multicast (PIM) is used by routers that forward multicast packets. The protocol-independent part of the name indicates that PIM is independent of the unicast routing protocol (for example, EIGRP or OSPF) running in the network. PIM uses the normal routing table, populated by the unicast routing protocol, in its multicast routing calculations.

Unlike other routing protocols, no routing updates are sent between PIM routers.

When a router forwards a unicast packet, it looks up the destination address in its routing table and forwards the packet out of the appropriate interface. However, when forwarding a multicast packet, the router might have to forward the packet out of multiple interfaces, toward all the receiving hosts. Multicast-enabled routers use PIM to dynamically create distribution trees that control the path that IP multicast traffic takes through the network to deliver traffic to all receivers.

The following two types of distribution trees exist:

- Source tree: A source tree is created for each source sending to each multicast group. The source tree has its root at the source and has branches through the network to the receivers.

- Shared tree: A shared tree is a single tree that is shared between all sources for each multicast group. The shared tree has a single common root, called a rendezvous point (RP).

Multicast routers consider the source address of the multicast packet as well as the destination address, and they use the distribution tree to forward the packet away from the source and toward the destination. Forwarding multicast traffic away from the source, rather than to the receiver, is called Reverse Path Forwarding (RPF). To avoid routing loops, RPF uses the unicast routing table to determine the upstream (toward the source) and downstream (away from the source) neighbors and ensures that only one interface on the router is considered to be an incoming interface for data from a specific source. For example, data received on one router interface and forwarded out another interface can loop around the network and come back into the same router on a different interface; RPF ensures that this data is not forwarded again.
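The RPF check described above can be sketched in a few lines of Python. This is a minimal illustration, not a router implementation: the routing table contents, the interface names, and the function names `rpf_interface` and `rpf_check` are all assumptions made for the example. It looks up the packet's source address in the unicast routing table (longest-prefix match) and accepts the packet only if it arrived on the interface that leads back toward the source.

```python
import ipaddress

# Hypothetical unicast routing table: prefix -> interface used to reach it.
UNICAST_ROUTES = {
    "10.1.0.0/16": "Gi0/1",
    "10.1.5.0/24": "Gi0/2",
    "0.0.0.0/0": "Gi0/0",
}


def rpf_interface(source_ip, routes=UNICAST_ROUTES):
    """Return the interface the unicast table uses to reach source_ip
    (longest-prefix match), or None if no route matches."""
    addr = ipaddress.ip_address(source_ip)
    best = None  # (network, interface) of the most specific match so far
    for prefix, interface in routes.items():
        network = ipaddress.ip_network(prefix)
        if addr in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, interface)
    return best[1] if best else None


def rpf_check(source_ip, arrival_interface, routes=UNICAST_ROUTES):
    """Accept a multicast packet only if it arrived on the upstream
    (toward the source) interface; otherwise it must not be forwarded."""
    upstream = rpf_interface(source_ip, routes)
    return upstream is not None and upstream == arrival_interface


print(rpf_check("10.1.5.7", "Gi0/2"))  # True: arrived on the upstream interface
print(rpf_check("10.1.5.7", "Gi0/1"))  # False: looped copy on another interface
```

A packet that fails the check is treated as a looped or misrouted copy and is not forwarded, which is how a single incoming interface per source is enforced.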

PIM operates in one of the following two modes:

- Sparse mode: This mode uses a “pull” model to send multicast traffic. Sparse mode uses a shared tree and therefore requires an RP to be defined. Sources register with the RP. When receivers explicitly request to join a specific multicast group, the routers along the path from those receivers register to join that group. These routers calculate, using the unicast routing table, whether they have a better metric to the RP or to the source itself; they forward the join message to the device with the better metric.

- Dense mode: This mode uses a “push” model that floods multicast traffic to the entire network. Dense mode uses source trees. Routers that have no need for the data (because they are not connected to receivers that want the data or to other routers that want it) request that the tree be pruned so that they no longer receive the data.

QoS Considerations in LAN Switches

A campus network transports many types of applications and data, which might include high-quality video and delay-sensitive data (such as real-time voice). Bandwidth-intensive applications enhance many business processes but might also stretch network capabilities and resources. Networks must provide secure, predictable, measurable, and sometimes guaranteed services. Achieving the required QoS by managing delay, delay variation (jitter), bandwidth, and packet loss parameters on a network can be the key to a successful end-to-end business solution. QoS mechanisms are techniques used to manage network resources.

The assumption that a high-capacity, nonblocking switch with a multigigabit backplane never needs QoS is incorrect. Many networks or individual network elements are oversubscribed, so congestion is easy to produce and some form of QoS is required. The sum of the bandwidths of all ports on a switch where end devices are connected is usually greater than the bandwidth of the uplink port; when the access ports are fully used, congestion on the uplink port is unavoidable. Uplinks from the Building Access layer to the Building Distribution layer, or from the Building Distribution layer to the Campus Core layer, most often require QoS. Depending on traffic flow and uplink oversubscription, bandwidth is managed with QoS mechanisms on the Building Access, Building Distribution, or even Campus Core switches.
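The oversubscription argument comes down to simple arithmetic, sketched below. The port counts and speeds are illustrative assumptions (a hypothetical access switch with 48 ports at 1 Gbps and two 10-Gbps uplinks), and `oversubscription_ratio` is a helper name invented for this example.

```python
def oversubscription_ratio(access_ports, access_gbps, uplink_ports, uplink_gbps):
    """Ratio of total access-port bandwidth to total uplink bandwidth."""
    access_total = access_ports * access_gbps
    uplink_total = uplink_ports * uplink_gbps
    return access_total / uplink_total


# Hypothetical switch: 48 x 1 Gbps access ports, 2 x 10 Gbps uplinks.
ratio = oversubscription_ratio(48, 1, 2, 10)
print(f"{ratio:.1f}:1")  # 2.4:1
```

Any ratio above 1:1 means the uplink can be congested when enough access ports transmit at once, even though the switch fabric itself is nonblocking.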

QoS Mechanisms

QoS mechanisms or tools implemented on LAN switches include the following:

- Classification and marking: Packet classification is the process of partitioning traffic into multiple priority levels, or classes of service. Information in the frame or packet header is inspected, and the frame’s priority is determined. Marking is the process of changing the priority or class of service (CoS) setting within a frame or packet to indicate its classification.

  For IEEE 802.1Q frames, the 3 user priority bits in the Tag field, commonly referred to as the 802.1p bits, are used as CoS bits. However, Layer 2 markings are not useful as end-to-end QoS indicators, because the medium often changes throughout a network (for example, from Ethernet to a Frame Relay WAN). Thus, Layer 3 markings are required to support end-to-end QoS.

  For IPv4, Layer 3 marking can be done using the 8-bit type of service (ToS) field in the packet header. Originally, only the first 3 bits were used; these bits are called the IP Precedence bits. Because 3 bits can specify only eight marking values, IP precedence does not allow a granular classification of traffic. Thus, more bits are now used: the first 6 bits in the ToS field are now known as the Differentiated Services Code Point (DSCP) bits.

- Congestion management: Queuing: Queuing separates traffic into various queues or buffers; the marking in the frame or packet can be used to determine which queue traffic goes in. A network interface is often congested (even at high speeds, transient congestion is observed); queuing techniques ensure that traffic from the critical applications is forwarded appropriately. For example, real-time applications such as VoIP and stock trading might have to be forwarded with the least latency and jitter.

- Congestion management: Scheduling: Scheduling is the process that determines the order in which queues are serviced.

- Policing and shaping: Policing and shaping tools identify traffic that violates some threshold level and reduce a stream of data to a predetermined rate or level. Traffic shaping buffers out-of-profile frames for a short time; policing simply drops them or lowers their priority.
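The bit layouts mentioned under classification and marking can be made concrete with a short Python sketch. The function names are invented for this example. It assumes that the 3 user priority bits are the top 3 bits of the 16-bit 802.1Q tag control field, and that IP Precedence and DSCP are the top 3 and top 6 bits of the 8-bit ToS byte.

```python
def cos_from_tci(tci):
    """802.1p CoS: the top 3 bits of the 16-bit 802.1Q tag control field."""
    return (tci >> 13) & 0b111


def ip_precedence(tos_byte):
    """IP Precedence: the first 3 bits of the 8-bit ToS field."""
    return (tos_byte >> 5) & 0b111


def dscp(tos_byte):
    """DSCP: the first 6 bits of the 8-bit ToS field."""
    return (tos_byte >> 2) & 0b111111


def tos_from_dscp(dscp_value):
    """Build a ToS byte with dscp_value in the top 6 bits (low 2 bits left 0)."""
    if not 0 <= dscp_value <= 63:
        raise ValueError("DSCP must fit in 6 bits (0-63)")
    return dscp_value << 2


tos = 0xB8  # binary 10111000
print(ip_precedence(tos))    # 5
print(dscp(tos))             # 46
print(cos_from_tci(0xA064))  # 5 (priority 5, VLAN ID 100)
```

The example shows why DSCP is the more granular marking: the same byte that yields one of only 8 precedence values yields one of 64 DSCP values, and the first 3 DSCP bits are the old precedence bits.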

QoS in LAN Switches

When configuring QoS features, classify the specific network traffic, prioritize and mark it according to its relative importance, and use congestion management and policing and shaping techniques to provide preferential treatment. Implementing QoS in the network makes network performance more predictable and bandwidth use more effective. Figure 4-8 illustrates where the various categories of QoS may be implemented in LAN switches.

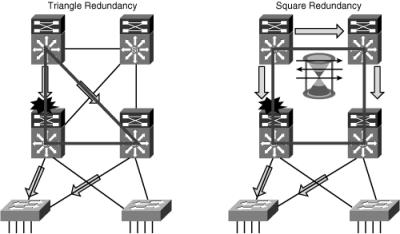
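As one illustration of the policing step described earlier (drop an out-of-profile frame or lower its priority), the sketch below uses a token bucket. The text does not name an algorithm, so the token bucket is a common technique swapped in here as an assumption. The class name, parameters, and rate values are hypothetical, and time is passed in explicitly so that the example is deterministic.

```python
class TokenBucketPolicer:
    """Single-rate policer: in-profile frames pass unchanged; out-of-profile
    frames are either dropped or marked down to a lower DSCP."""

    def __init__(self, rate_bps, burst_bytes, exceed_action="drop", markdown_dscp=0):
        if exceed_action not in ("drop", "markdown"):
            raise ValueError("exceed_action must be 'drop' or 'markdown'")
        self.rate_bytes_per_s = rate_bps / 8
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # bucket starts full
        self.last_time = 0.0
        self.exceed_action = exceed_action
        self.markdown_dscp = markdown_dscp

    def police(self, now, frame_bytes, dscp):
        """Return the DSCP to send the frame with, or None if it is dropped."""
        # Refill tokens for the time elapsed, capped at the burst size.
        elapsed = max(0.0, now - self.last_time)
        self.last_time = max(now, self.last_time)
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        if frame_bytes <= self.tokens:
            self.tokens -= frame_bytes  # in profile: spend tokens
            return dscp
        if self.exceed_action == "markdown":
            return self.markdown_dscp   # out of profile: lower the priority
        return None                     # out of profile: drop


policer = TokenBucketPolicer(rate_bps=8000, burst_bytes=1500)  # 1000 bytes/s
print(policer.police(0.0, 1000, 46))  # 46   (in profile)
print(policer.police(0.0, 1000, 46))  # None (only 500 tokens left)
print(policer.police(0.5, 1000, 46))  # 46   (500 tokens refilled in 0.5 s)
```

A shaper would differ only in the out-of-profile branch: instead of dropping or re-marking the frame, it would hold the frame in a buffer until enough tokens had accumulated.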

Data link layer switches are commonly used in the Building Access layer. Because they do not have knowledge of Layer 3 or higher information, these switches provide QoS classification and marking based only on the switch’s input port or MAC address. For example, traffic from a particular host can be defined as high-priority traffic on the uplink port. Multilayer switches may be used in the Building Access layer if Layer 3 services are required.
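The port-based or MAC-based classification described above can be sketched as a pair of lookup tables. Everything concrete here is an assumption made for illustration: the port names, the MAC address, the CoS values, and the choice to let a MAC match take precedence over a port match.

```python
# Hypothetical policy tables for a Layer 2 access switch.
COS_BY_SOURCE_MAC = {"00:1a:2b:3c:4d:5e": 5}  # for example, one high-priority host
COS_BY_INPUT_PORT = {"Fa0/1": 5, "Fa0/2": 3}
DEFAULT_COS = 0


def classify_cos(input_port, source_mac):
    """Choose a CoS value using only Layer 2 information:
    the source MAC address first, then the input port, then a default."""
    mac = source_mac.lower()
    if mac in COS_BY_SOURCE_MAC:
        return COS_BY_SOURCE_MAC[mac]
    return COS_BY_INPUT_PORT.get(input_port, DEFAULT_COS)


print(classify_cos("Fa0/9", "00:1A:2B:3C:4D:5E"))  # 5 (matched by MAC address)
print(classify_cos("Fa0/2", "aa:bb:cc:dd:ee:ff"))  # 3 (matched by input port)
print(classify_cos("Fa0/9", "aa:bb:cc:dd:ee:ff"))  # 0 (default)
```

Because the lookup never inspects the IP header, it reflects the limitation noted in the text: a data link layer switch cannot classify by application or by Layer 3 marking.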